Researchers from Sea AI Lab and National University of Singapore Introduce 'PoolFormer': A Derived Model from MetaFormer for Computer Vision Tasks - MarkTechPost

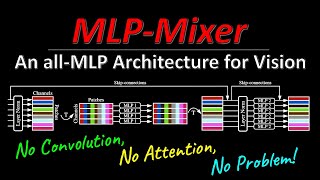

A Useful New Image Classification Method That Uses neither CNNs nor Attention | by Makoto TAKAMATSU | Towards AI

![[PDF] Exploring Corruption Robustness: Inductive Biases in Vision Transformers and MLP-Mixers | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/b145a25df2457bedfddeecb4be37828e43f6cc80/7-Figure1-1.png)

[PDF] Exploring Corruption Robustness: Inductive Biases in Vision Transformers and MLP-Mixers | Semantic Scholar

![Neil Houlsby on Twitter: "[2/3] Towards big vision. How does MLP-Mixer fare with even more data? (Question raised in @ykilcher video, and by others) We extended the "data scale" plot to the](https://pbs.twimg.com/media/E3lGny3WYAIJPpP.jpg:large)

Neil Houlsby on Twitter: "[2/3] Towards big vision. How does MLP-Mixer fare with even more data? (Question raised in @ykilcher video, and by others) We extended the "data scale" plot to the

ImageNet top-1 accuracy of different operator combinations. T, M, and C... | Download Scientific Diagram

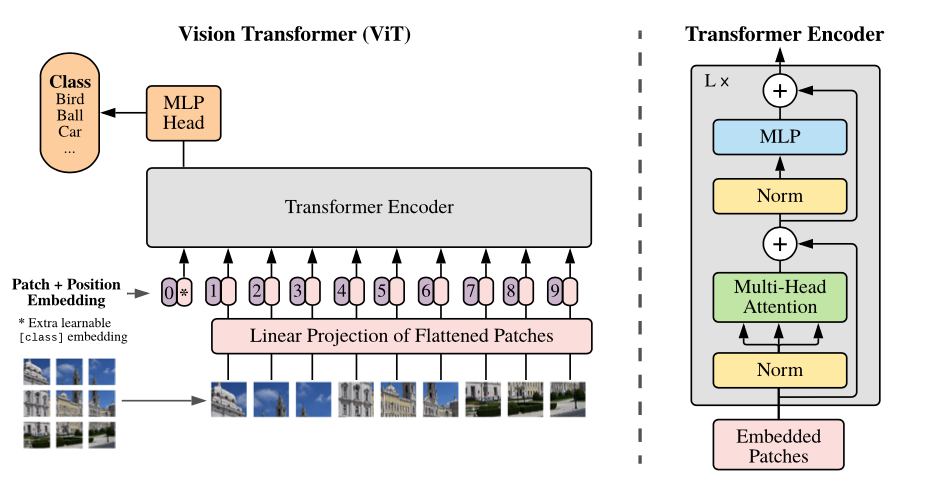

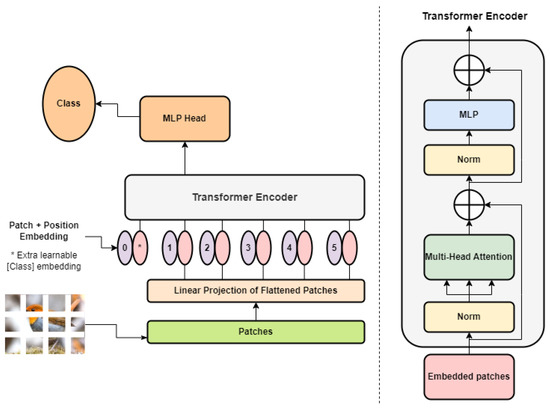

When Vision Transformers Outperform ResNets without Pre-training or Strong Data Augmentations | Papers With Code

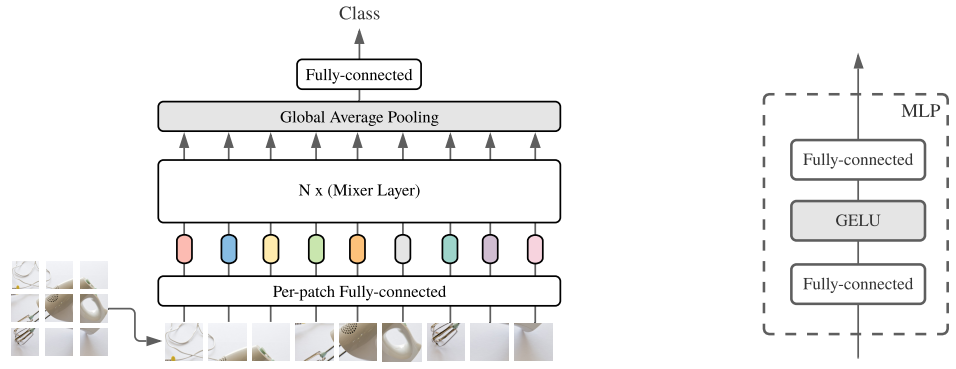

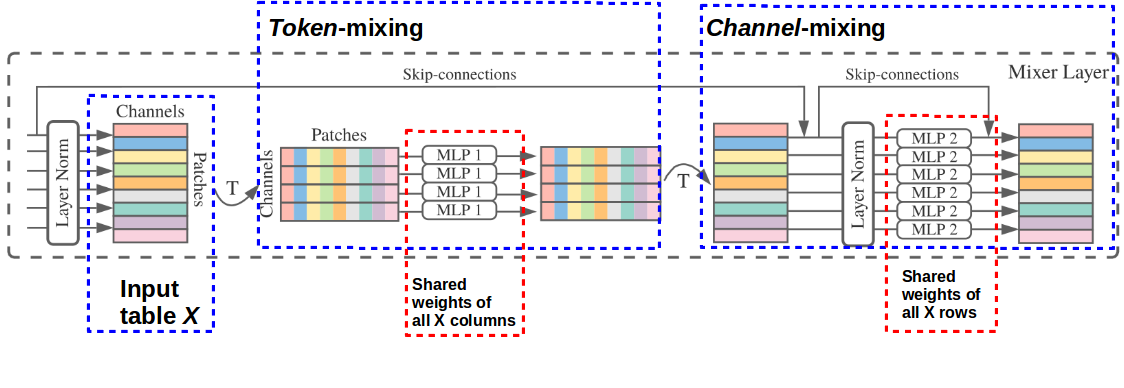

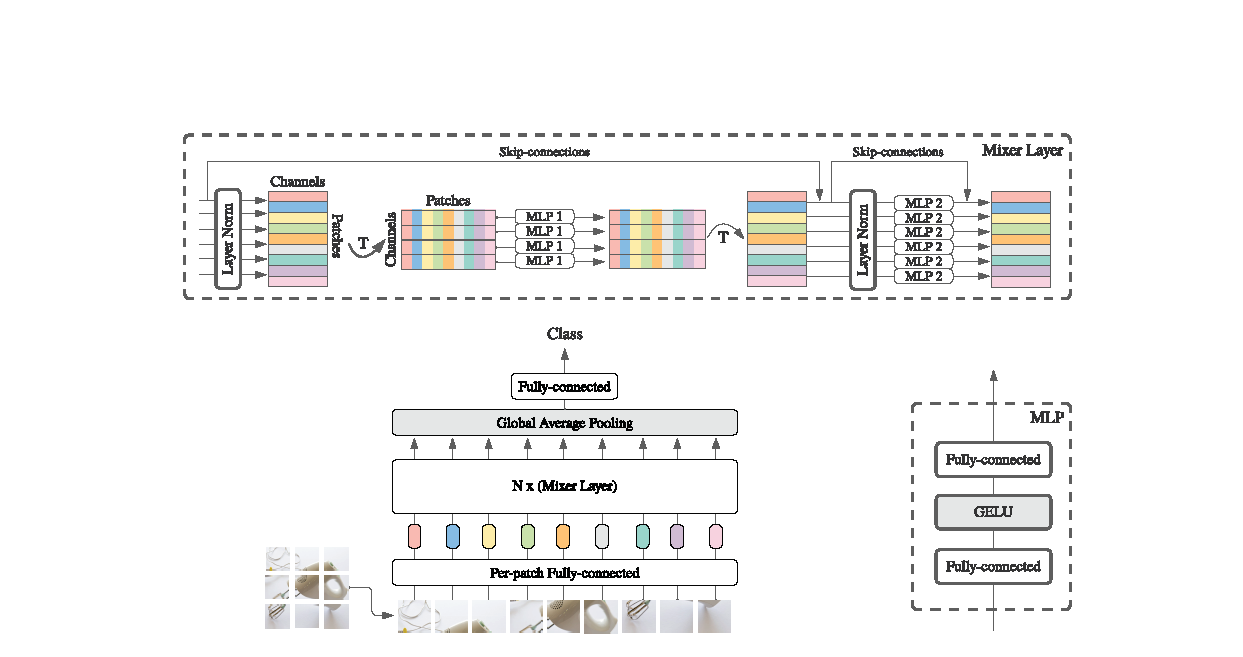

Google Releases MLP-Mixer: An All-MLP Architecture for Vision | by Mostafa Ibrahim | Towards Data Science

![[Research 🎉] MLP-Mixer: An all-MLP Architecture for Vision - Research & Models - TensorFlow Forum](https://discuss.tensorflow.org/uploads/default/original/2X/d/d18627debab539a6f795ebcabfa65faacb00bab8.png)

![[2201.12083] DynaMixer: A Vision MLP Architecture with Dynamic Mixing](https://ar5iv.labs.arxiv.org/html/2201.12083/assets/x1.png)

![[PDF] MLP-Mixer: An all-MLP Architecture for Vision | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/3d4a76a06e771ae450494b60522eab9105709024/6-Figure2-1.png)